This is the third article in the series “A Scanning Dream”. Here we discuss 3D reconstruction systems based on structured light. Such systems consist of a camera, a projector, and 3D reconstruction software, but they vary greatly depending on the application. Some are expensive professional hand-held scanners (Artec, EinScan), while others can scan small objects with a lower but still useful level of detail. We will focus on the group we find most interesting, affordable general-purpose 3D scanners:

- Structure Sensor, which turns an iPad into a 3D scanner.

- Structure Core, which connects to a PC.

- Mobile scanners based on the TrueDepth sensor, starting with iPhone X and the third-generation iPad Pro.

- Asus Xtion series.

- Intel RealSense series.

- Kinect for Xbox 360 with a PC adapter. It is discontinued but still available on the used market.

How it works

Let’s use Kinect as an example. This device was originally created as a game controller for Xbox 360. It captured the full-body motion of two players in real time and was revolutionary for its time. Microsoft later released Kinect for Windows and the Kinect SDK, which unlocked the hardware’s potential for general computer vision tasks. Kinect for Windows and Kinect for Xbox 360 are essentially the same hardware, but only Kinect for Windows comes with a license for commercial use.

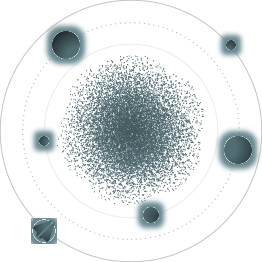

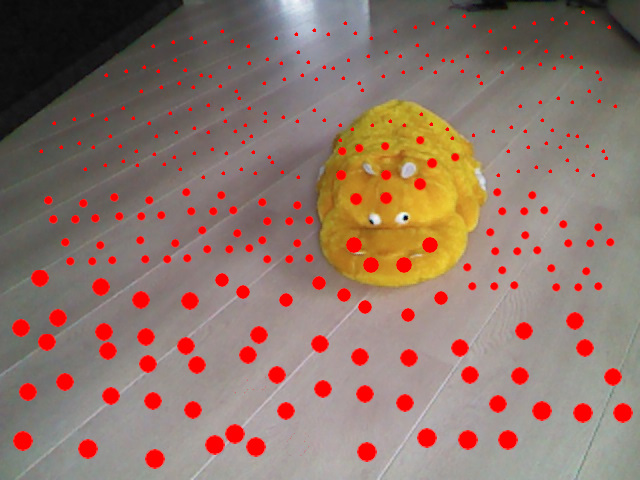

First, run the scanning software (for example, Skanect), point the Kinect at the object, and then move it around the object along a sphere, keeping a roughly constant distance. During this stage, the Kinect projects an infrared dot pattern onto the scene.

By analyzing the reflected infrared pattern, the Kinect produces a depth map for each frame in real time, from which a 3D point cloud of the target object is built. The TrueDepth sensors used for Face ID in iPhone and iPad project a similar infrared dot pattern. Other structured light systems project lines of light instead of dots and analyze how those lines curve across the target surface. As a result, each captured frame is available in RGB-D format: a plain color photo (RGB) plus a depth map that stores the distance from the Kinect to the surface at each pixel.
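The dot pattern works by triangulation: the projector and the infrared camera sit a known distance apart (the baseline), so each dot appears shifted (the disparity) by an amount that depends on its depth, roughly Z = f·b/d. The sketch below shows that relation and then back-projects an RGB-D frame into a colored point cloud with a pinhole camera model. It is a simplified illustration, not the Kinect's actual firmware; the focal length, baseline, principal point, and all function names are illustrative assumptions.

```python
from dataclasses import dataclass

# Illustrative pinhole intrinsics (assumed values for a 640x480 depth camera,
# not official Kinect calibration data).
@dataclass
class Intrinsics:
    fx: float = 580.0   # focal length in pixels (assumption)
    fy: float = 580.0
    cx: float = 319.5   # principal point (assumption)
    cy: float = 239.5

BASELINE_M = 0.075      # projector-to-camera distance in meters (assumption)

def depth_from_disparity(disparity_px: float, fx: float = 580.0,
                         baseline_m: float = BASELINE_M) -> float:
    """Simplified triangulation Z = f * b / d; returns 0.0 (no data) if d <= 0."""
    if disparity_px <= 0:
        return 0.0
    return fx * baseline_m / disparity_px

def rgbd_to_points(depth, rgb, k: Intrinsics):
    """Back-project an RGB-D frame into (x, y, z, (r, g, b)) points.

    depth: 2D list of distances in meters (0 means no measurement)
    rgb:   2D list of (r, g, b) tuples aligned pixel-for-pixel with depth
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:                      # no dot matched at this pixel
                continue
            x = (u - k.cx) * z / k.fx       # pinhole back-projection
            y = (v - k.cy) * z / k.fy
            points.append((x, y, z, rgb[v][u]))
    return points
```

With these assumed values, a disparity of 43.5 px corresponds to a depth of about 1 m, and halving the disparity doubles the depth.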

The software reconstructs the mesh by merging several depth maps, much as photogrammetry (discussed in the previous article) merges information from many photos. It can also texture the mesh by averaging, for each triangle, the pixel values it receives from several frames.
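A common way to merge depth maps is a truncated signed distance function (TSDF), the approach popularized by KinectFusion; we do not claim this is exactly what Skanect does internally. The sketch reduces the idea to a single camera ray: each voxel keeps a weighted running average of its signed distance to the observed surface, and the surface lies where that average crosses zero. A second helper shows the per-triangle color averaging described above. The truncation distance and all function names are illustrative assumptions.

```python
# Minimal 1D TSDF fusion along a single camera ray (illustrative sketch).
TRUNC_M = 0.03  # truncation distance in meters (assumption)

def fuse_depth(tsdf, weights, voxel_depths, measured_depth, trunc=TRUNC_M):
    """Integrate one depth measurement into the voxels along a ray.

    tsdf, weights:  lists updated in place, one entry per voxel
    voxel_depths:   distance of each voxel center from the camera (m)
    measured_depth: depth seen along this ray in the current frame (m)
    """
    if measured_depth <= 0:            # no measurement for this pixel
        return
    for i, z in enumerate(voxel_depths):
        sdf = measured_depth - z       # positive in front of the surface
        if sdf < -trunc:               # far behind the surface: unobserved
            continue
        d = min(1.0, sdf / trunc)      # truncate and normalize to [-1, 1]
        w = weights[i]
        tsdf[i] = (tsdf[i] * w + d) / (w + 1)   # weighted running average
        weights[i] = w + 1

def surface_depth(tsdf, weights, voxel_depths):
    """Return the interpolated zero crossing (the surface), or None."""
    for i in range(len(tsdf) - 1):
        a, b = tsdf[i], tsdf[i + 1]
        if weights[i] and weights[i + 1] and a > 0 >= b:
            t = a / (a - b)            # linear interpolation between voxels
            return voxel_depths[i] + t * (voxel_depths[i + 1] - voxel_depths[i])
    return None

def average_color(samples):
    """Average the (r, g, b) samples one triangle received from several frames."""
    n = len(samples)
    return tuple(sum(c[k] for c in samples) / n for k in range(3))
```

Fusing two noisy readings of 0.99 m and 1.01 m places the surface at 1.0 m: averaging many frames is what smooths out per-frame sensor noise.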

The main difference from photogrammetry is that the depth map is already available when the frame is captured. This requires additional hardware, an infrared dot projector and a depth sensor, but it significantly reduces reconstruction time: for example, the model above was reconstructed in several minutes.

The model above has 2 million triangles but low-quality textures because of the very low resolution of the RGB sensor in the Kinect for Xbox 360, so it is unlikely to be usable for modern VR. In any case, the model would require some additional manual processing; we will discuss this in the last article of the series, “A Scanning Dream: How to use a scanned scene or object” (coming later).
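To put the texture limitation in numbers: the Kinect for Xbox 360 color stream is typically 640×480. Assuming a horizontal field of view of about 62° (an approximate figure used here only for illustration), one color pixel covers roughly 1.9 mm of the surface at 1 m, and twice that at 2 m. The helper name below is hypothetical.

```python
import math

def pixel_footprint_mm(distance_m, hfov_deg, width_px):
    """Width of surface covered by one pixel (mm) for a pinhole camera."""
    view_width_m = 2.0 * distance_m * math.tan(math.radians(hfov_deg) / 2.0)
    return view_width_m / width_px * 1000.0

# 640 px wide color stream; the 62 degree horizontal FOV is an assumed value.
for d in (0.5, 1.0, 2.0):
    print(f"{d:.1f} m: {pixel_footprint_mm(d, 62.0, 640):.2f} mm per pixel")
```

Because the footprint grows linearly with distance, scanning closer helps texture sharpness, but only within the sensor's minimum working distance.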

What is required

This type of scanning requires special hardware and software. We used Kinect to explain how it works, but it is not our first choice because of its limited availability, low texture resolution, and potential license limitations. We recommend modern hardware together with dedicated software such as Skanect or Faro SCENE Capture.

Most of these systems require a PC for processing, though it can be less powerful than the one needed for photogrammetry. Structure Sensor doesn’t require a PC: combined with an iPad, it forms a hand-held 3D scanner. You can also use a recent iPhone or iPad as a 3D scanner through its TrueDepth sensor, with Scandy, Bellus3D, or a similar app.

Limitations and Conclusion

3D reconstruction systems based on structured light have the following limitations:

- It’s impossible to reconstruct objects with reflective or transparent surfaces.

- The object being scanned must not move or deform during capture.

- Other light sources may interfere with the projected infrared dot pattern.

- It’s impossible to reconstruct large objects (larger than a few meters).
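Several of these limitations show up directly in the depth data: where the dot pattern is lost (on mirror-like or transparent surfaces, under strong ambient infrared, or too far away), the sensor returns no measurement, leaving holes in the fused model. A minimal filtering step could look like the sketch below, assuming missing readings are encoded as 0 and an illustrative working range of 0.5 to 4 m (not an official specification). Both function names are hypothetical.

```python
def valid_depth_mask(depth, near_m=0.5, far_m=4.0):
    """Mark pixels usable for reconstruction.

    depth: 2D list of distances in meters; 0 is assumed to mean "no reading"
    (the pattern was not recognized, e.g. on glass, mirrors, or in sunlight).
    near_m / far_m: assumed working range of the sensor.
    """
    return [[near_m <= z <= far_m for z in row] for row in depth]

def coverage(mask):
    """Fraction of pixels with a valid measurement."""
    total = sum(len(row) for row in mask)
    return sum(sum(row) for row in mask) / total if total else 0.0
```

A low coverage value for frames aimed at a window or a glossy surface is an early hint that the final mesh will have holes there.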

Structured light systems can reconstruct objects with plain, featureless surfaces, because the projected pattern supplies the features that photogrammetry has to find on the surface itself; this is an advantage over the photogrammetric method. Thanks to their speed, they are also better suited for scanning people. They don’t need the strict lighting conditions that photogrammetry requires to produce meshes without holes or geometry issues, although good lighting is still important for textures. Structured light is much faster than photogrammetry and requires a less powerful PC.

Unfortunately, such systems are usable only indoors, because direct sunlight overwhelms the projected infrared pattern. Moreover, only a few available solutions work without a PC. Most have a low-power projector and a low-resolution depth sensor, so they can’t be used to scan large objects like buildings and monuments.
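The range limit also follows from triangulation geometry. Since Z = f·b/d, a fixed error in locating a dot (a disparity error σ_d) becomes a depth error that grows with the square of the distance: σ_Z ≈ Z²·σ_d/(f·b). The sketch evaluates this with assumed values (f = 580 px, b = 7.5 cm, σ_d = 0.1 px); these are illustrative, not measured Kinect figures, and the function name is hypothetical.

```python
def depth_error_m(z_m, focal_px=580.0, baseline_m=0.075, disparity_err_px=0.1):
    """Approximate depth uncertainty of a triangulation-based depth sensor.

    From Z = f*b/d it follows that |dZ/dd| = Z**2 / (f*b), so a disparity
    error of sigma_d pixels gives sigma_Z ~= Z**2 * sigma_d / (f*b).
    All default parameter values are illustrative assumptions.
    """
    return z_m ** 2 * disparity_err_px / (focal_px * baseline_m)

for z in (1.0, 2.0, 4.0, 10.0):
    print(f"{z:4.1f} m -> about {depth_error_m(z) * 1000:.0f} mm")
```

Under these assumptions the error is a couple of millimeters at 1 m but several centimeters at 4 m and over 20 cm at 10 m, even before the projected dots become too faint to detect.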

The next article, “A Scanning Dream: using time-of-flight camera and laser”, will describe laser-based 3D reconstruction systems.