An employee of a large medical company approached us with the idea of using augmented reality (AR) for guided recovery exercises.

People going through recovery often can’t visit a specialist for every session, if at all. The client wanted to use the AR and body-detection capabilities of modern handheld devices to build an app with a set of guided exercises and spoken feedback.

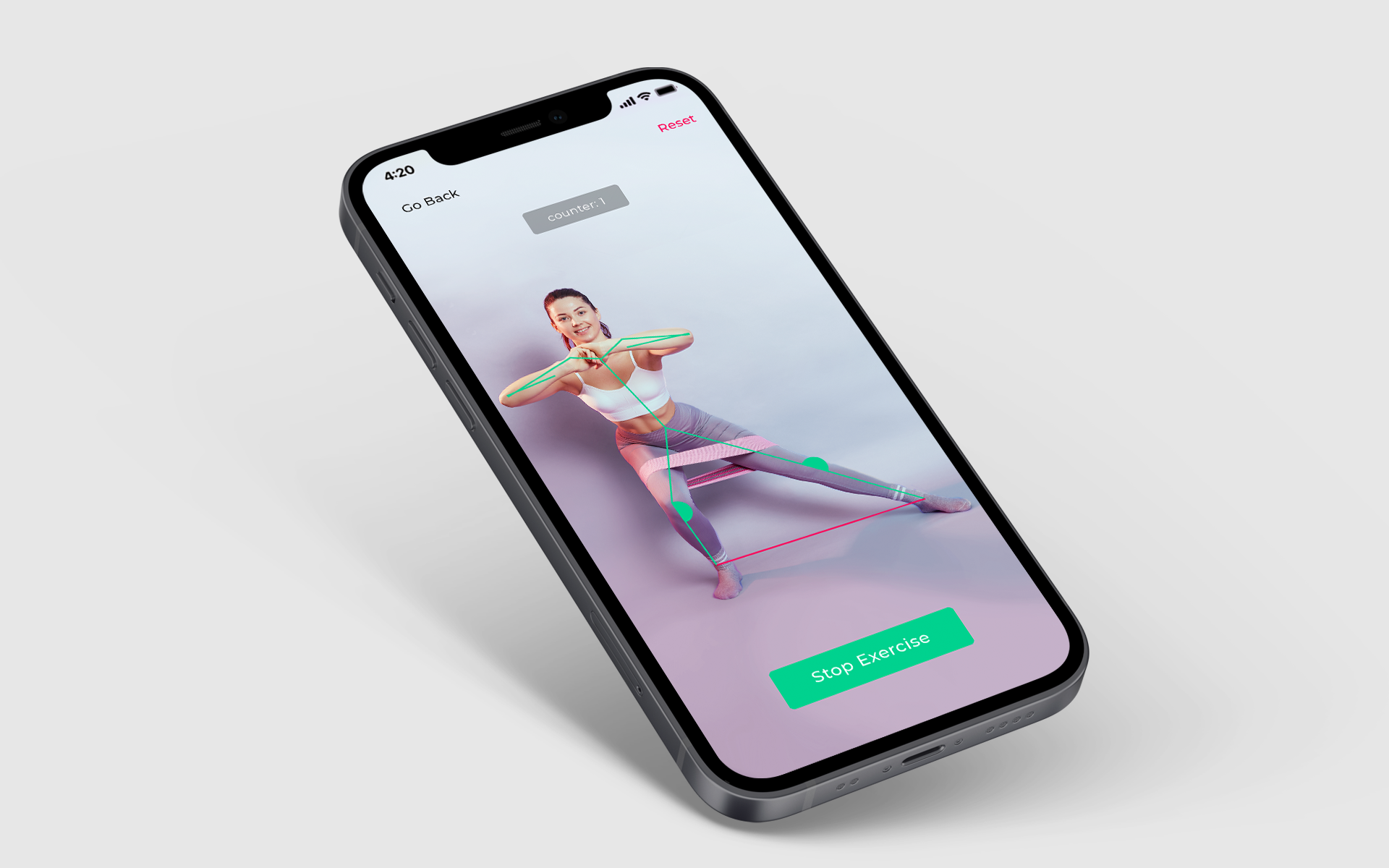

We opted for a minimal stack. ARKit detects the body posture, SceneKit draws a simple skeleton over the user’s body, and the default iOS text-to-speech (TTS) provides voice guidance. Distances and angles between the bones and joints of the detected skeleton are used to estimate how close the user is to the expected pose.
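To make the angle idea concrete, here is a minimal sketch of how the angle at a joint could be computed from three 3D joint positions. It is written in Python for brevity and is not the app's code (which runs on iOS); the function name `joint_angle` and the sample coordinates are assumptions made for illustration.

```python
import math

def joint_angle(a, b, c):
    """Return the angle in degrees at joint b, formed by the points a-b-c.

    Each point is an (x, y, z) tuple, e.g. hip, knee, ankle positions.
    """
    ba = [a[i] - b[i] for i in range(3)]  # vector from b to a
    bc = [c[i] - b[i] for i in range(3)]  # vector from b to c
    dot = sum(x * y for x, y in zip(ba, bc))
    norm = math.sqrt(sum(x * x for x in ba)) * math.sqrt(sum(x * x for x in bc))
    if norm == 0:
        raise ValueError("degenerate joint: two points coincide")
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos_angle = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_angle))

# Hypothetical joint positions in metres: a straight leg and a right angle.
straight_leg = joint_angle((0.0, 1.0, 0.0), (0.0, 0.5, 0.0), (0.0, 0.0, 0.0))
right_angle = joint_angle((1.0, 0.0, 0.0), (0.0, 0.0, 0.0), (0.0, 1.0, 0.0))
```

Angles have the advantage of not depending on the user's height or distance from the camera, whereas raw distances would likely need normalizing (for example by torso length) before being compared.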

A set of exercises

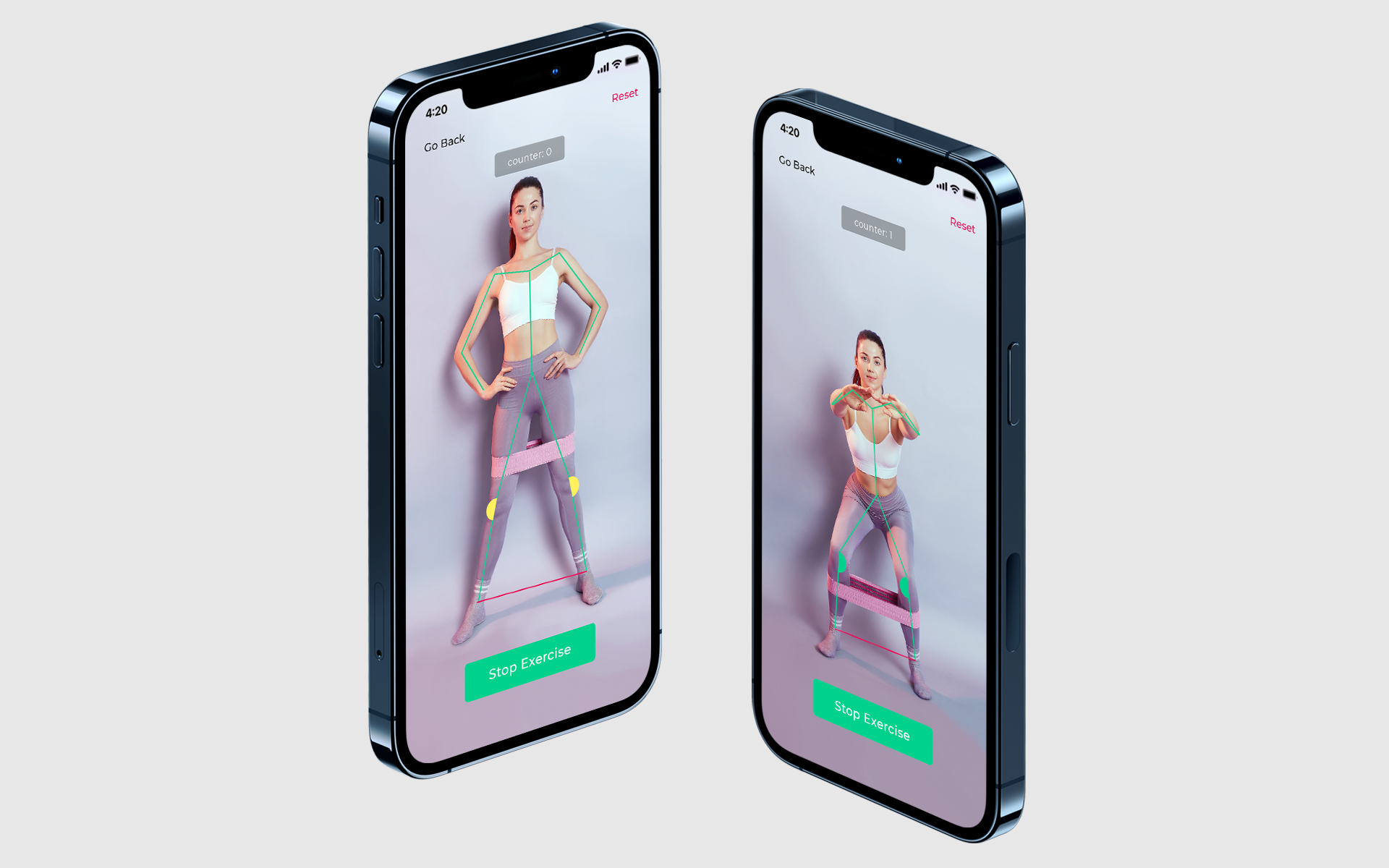

We agreed on a fixed set of exercises (squats and side stretches) and, for each one, manually selected reference postures at the beginning, middle, and end of the movement. Each posture was described by a set of numbers representing body angles and distances, which we used to gauge whether the user was doing the exercise correctly.
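One way such reference postures could be represented and checked is sketched below. This is an illustration in Python, not the project's actual data model: the `PoseTemplate` type, the feature names, the target angles, and the tolerance are all hypothetical values chosen for the example.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PoseTemplate:
    name: str
    targets: dict     # feature name -> target value (degrees here)
    tolerance: float  # maximum allowed deviation for any single feature

def pose_error(measured, template):
    """Largest absolute deviation from the template across its features."""
    return max(abs(measured[key] - target)
               for key, target in template.targets.items())

def matches(measured, template):
    """True when every measured feature is within the template's tolerance."""
    return pose_error(measured, template) <= template.tolerance

# Hypothetical benchmark for the bottom of a squat (not the project's values).
SQUAT_BOTTOM = PoseTemplate(
    name="squat_bottom",
    targets={"left_knee": 90.0, "right_knee": 90.0, "hip": 80.0},
    tolerance=15.0,
)

good_attempt = {"left_knee": 95.0, "right_knee": 88.0, "hip": 70.0}
shallow_attempt = {"left_knee": 120.0, "right_knee": 118.0, "hip": 100.0}

good_ok = matches(good_attempt, SQUAT_BOTTOM)        # worst deviation is 10
shallow_ok = matches(shallow_attempt, SQUAT_BOTTOM)  # worst deviation is 30
```

Using the worst single deviation rather than an average means one badly placed joint cannot be hidden by several well placed ones, which seems a reasonable default for form checking.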

ARKit body detection

We integrated ARKit body detection and compared the data from the detected skeleton against our benchmark values for each pose. We then used SceneKit to render the relevant parts of the skeleton over the camera feed.

TTS

We added guiding logic and quality-of-life improvements: TTS prompts that tell the user the next step (e.g. “please sit down”, “sit down deeper”, “stand up”), a rep counter, the ability to record the screen for later analysis, and a tracker of previous workouts and their success rate.
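The guiding logic and rep counter can be thought of as a small state machine driven by the measured joint angle. The sketch below, in Python for brevity, shows one possible shape for squats. The class name `SquatCounter` and the angle thresholds are assumptions for illustration; only the prompt strings come from the description above. In the app, each returned prompt would be handed to the TTS engine.

```python
class SquatCounter:
    """Counts squat reps from a stream of knee angles (degrees)."""

    def __init__(self, down_angle=100.0, up_angle=160.0):
        self.down_angle = down_angle  # at or below this counts as deep enough
        self.up_angle = up_angle      # at or above this counts as standing
        self.phase = "up"             # "up": waiting for depth, "down": waiting to stand
        self.lowest = 180.0           # smallest angle seen in the current descent
        self.reps = 0

    def update(self, knee_angle):
        """Feed one sample; return a prompt to speak, or None."""
        if self.phase == "up":
            self.lowest = min(self.lowest, knee_angle)
            if knee_angle <= self.down_angle:
                self.phase = "down"
                return "stand up"
            if knee_angle >= self.up_angle and self.lowest < self.up_angle:
                # The user stood back up without reaching the target depth.
                self.lowest = knee_angle
                return "sit down deeper"
            return None
        # phase == "down": the rep completes once the user is standing again.
        if knee_angle >= self.up_angle:
            self.phase = "up"
            self.reps += 1
            self.lowest = knee_angle
            return "please sit down"
        return None

# Hypothetical samples: one shallow attempt followed by one full rep.
counter = SquatCounter()
samples = [170, 140, 120, 165, 150, 95, 130, 170]
prompts = [counter.update(angle) for angle in samples]
```

Keeping separate "down" and "up" thresholds gives some hysteresis, so a rep is not counted twice when the angle hovers near a single cutoff. Real tracking data is noisy, so the input would probably also need smoothing before being fed to a counter like this.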

Development

A minimum viable product with two guided exercises and spoken feedback.